I think we are entering a new era of LLM (large language model) AI/ML.

But here’s one of the many things I think everyone is overlooking: a lot of content on the web (code included) is now generated by ChatGPT, so at some point LLMs will be trained on earlier LLM-generated text in order to produce new text.

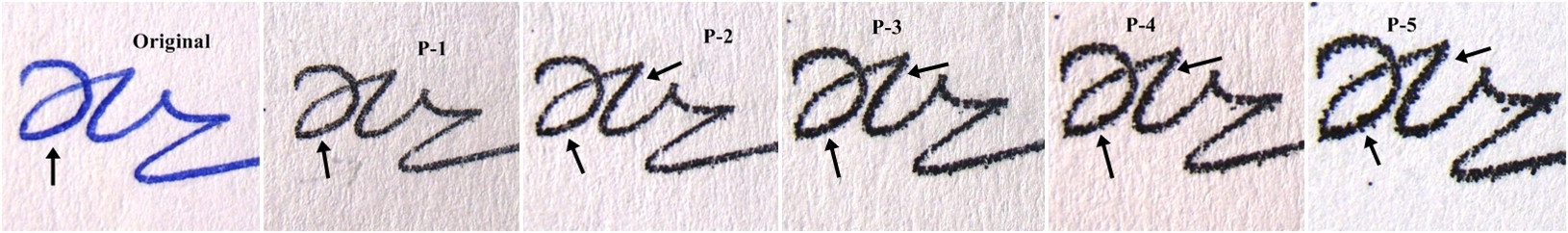

Humanity has faced this problem before: back in the days of photocopiers, photocopying a photocopy lost a little quality with every generation of copies.

So, let’s imagine that ChatGPT, like human memory, is 90% accurate, and we decide that’s “good enough”. ChatGPT content based on prior ChatGPT content is then only 81% accurate (0.9 × 0.9), the next generation is 72.9% accurate (0.9 × 0.9 × 0.9), and so on. We already can’t reliably predict the output from the input (ChatGPT is a black box), so this compounding loss of accuracy becomes concerning very quickly, because we can never say the output is 100% accurate.
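To make the arithmetic concrete, here is a minimal sketch of the compounding model above. It assumes, as the thought experiment does, that every generation keeps a fixed fraction (90%) of the previous generation’s accuracy, so accuracy after *n* generations is 0.9 to the power *n*. The function names are hypothetical, and real models don’t degrade this neatly; this only illustrates how quickly the numbers fall.

```python
def accuracy_after(generations: int, per_generation_accuracy: float = 0.9) -> float:
    """Accuracy after `generations` rounds, assuming each round keeps only
    `per_generation_accuracy` of the previous round's accuracy (a toy model)."""
    return per_generation_accuracy ** generations


def generations_until_below(threshold: float, per_generation_accuracy: float = 0.9) -> int:
    """Number of generations until accuracy first drops below `threshold`."""
    if not 0 < per_generation_accuracy < 1 or not 0 < threshold <= 1:
        raise ValueError("expected 0 < per_generation_accuracy < 1 and 0 < threshold <= 1")
    generations, accuracy = 0, 1.0
    while accuracy >= threshold:
        generations += 1
        accuracy *= per_generation_accuracy
    return generations


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"generation {n}: {accuracy_after(n):.1%}")
    # generation 1: 90.0% ... generation 5: 59.0%
    print(generations_until_below(0.5))  # 7
```

Under this toy model, it takes only seven generations of “good enough” content built on “good enough” content before the result is wrong more often than it is right.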

Here’s an example more relevant to software engineering. Say ChatGPT is used to write code, the code is never reviewed by a good software engineer, and that code does not encrypt user emails in the DB (encryption at rest is best practice these days, IMO). Later, ChatGPT is used again to write more code on top of the existing code, and it introduces a bug that exposes users’ emails to the public. Those emails leak in plaintext because faulty code was written on top of faulty code, with no review by a software engineer and no effort to address the technical debt.
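One way a reviewer might catch the first mistake before anything gets built on top of it is an automated check that flags email addresses stored in readable form. The sketch below is hypothetical: it assumes a SQLite database and uses only Python’s standard `sqlite3` and `re` modules. `find_plaintext_emails`, the `users` table and the `email` column are illustrative names, and the regex is a rough heuristic, not a full email validator. It does not encrypt anything; it only detects values that were clearly never encrypted.

```python
import re
import sqlite3

# Rough heuristic for "looks like a readable email address"; not RFC 5322.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def find_plaintext_emails(conn: sqlite3.Connection, table: str, column: str) -> list[str]:
    """Return values in table.column that look like unencrypted email addresses.

    `table` and `column` are interpolated into the SQL (identifiers can't be
    bound as parameters), so they must come from trusted code, never user input.
    """
    rows = conn.execute(f'SELECT "{column}" FROM "{table}"').fetchall()
    return [value for (value,) in rows if isinstance(value, str) and EMAIL_PATTERN.match(value)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany(
        "INSERT INTO users (email) VALUES (?)",
        # The second value stands in for an encrypted email (placeholder text, not real ciphertext).
        [("alice@example.com",), ("gAAAAAB-placeholder-ciphertext",)],
    )
    print(find_plaintext_emails(conn, "users", "email"))  # ['alice@example.com']
```

A check like this could run in CI against a test database; it is a safety net for review, not a substitute for it.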

I know this may sound like “I’m a software engineer worried about my job because of AI”, but I’ve thought it through and backed it up with examples; this is not simply unfounded fear of, or anger at, technological progress. This is, IMO, a case of the public falling for marketing and adopting unknown, undocumented technology too quickly.

I look forward to advancing the field and working on sound solutions!